Challenge configuration¶

Following fields are required (and can be customized) in the challenge_config.yml.

title: Title of the challenge

short_description: Short description of the challenge (preferably 140 characters max)

description: Long description of the challenge (use a relative path for the HTML file, e.g.

challenge_details/description.html)evaluation_criteria: Evaluation criteria and details of the challenge (use a relative path for the HTML file, e.g.

challenge_details/evaluation.html)terms_and_conditions: Terms and conditions of the challenge (use a relative path for the HTML file, e.g.

challenge_details/tnc.html)image: Logo of the challenge (use a relative path for the logo in the zip configuration, e.g.

images/logo/challenge_logo.jpg). Note: The image must be in jpg, jpeg or png format.submission_guidelines: Submission guidelines of the challenge (use a relative path for the HTML file, e.g.

challenge_details/submission_guidelines.html)evaluation_script: Python script which will decide how to evaluate submissions in different phases (path of the evaluation script file or folder relative to this YAML file. For e.g.

evaluation_script/)remote_evaluation: True/False (specify whether evaluation will happen on a remote machine or not. Default is

False)start_date: Start DateTime of the challenge (Format: YYYY-MM-DD HH:MM:SS, e.g. 2017-07-07 10:10:10) in

UTCtime zoneend_date: End DateTime of the challenge (Format: YYYY-MM-DD HH:MM:SS, e.g. 2017-07-07 10:10:10) in

UTCtime zonepublished: True/False (Boolean field that gives the flexibility to publish the challenge once approved by EvalAI admin. Default is

False)allowed_email_domains: A list of domains allowed to participate in the challenge. Leave blank if everyone is allowed to participate. (e.g.

["domain1.com", "domain2.org", "domain3.in"]Participants with these email domains will only be allowed to participate.)blocked_emails_domains: A list of domains not allowed to participate in the challenge. Leave blank if everyone is allowed to participate. (e.g.

["domain1.com", "domain2.org", "domain3.in"]Participants with these email domains will not be allowed to participate.)leaderboard: A leaderboard for a challenge on EvalAI consists of following subfields:

- id: Unique positive integer field for each leaderboard entry

- schema: Schema field contains the information about the rows of the leaderboard. A schema contains two keys in the leaderboard:

labels: Labels are the header rows in the leaderboard according to which the challenge ranking is done.default_order_by: This key decides the default sorting of the leaderboard based on one of the labels defined above.

The leaderboard schema for the sample challenge configuration looks like this:

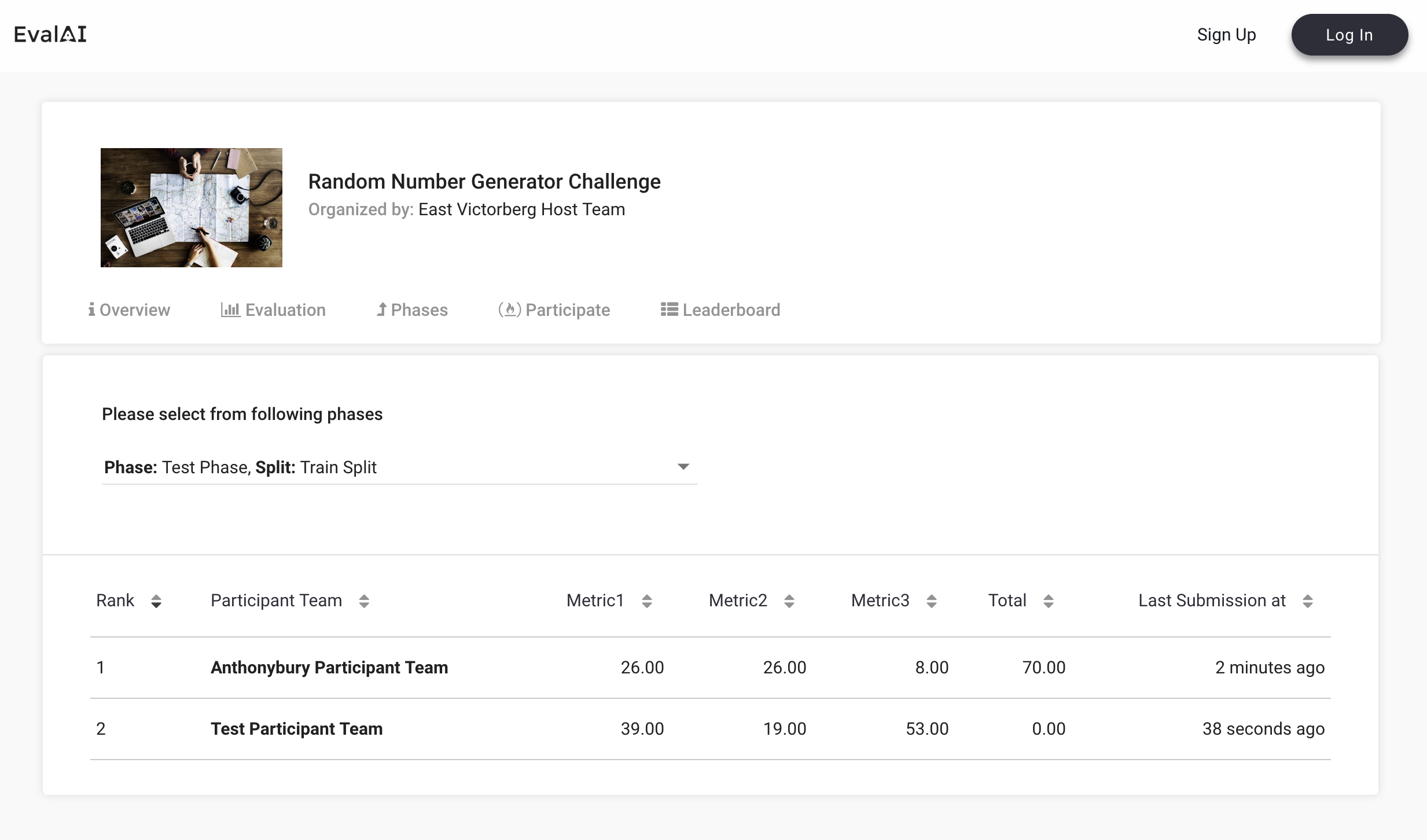

leaderboard: - id: 1 schema: { "labels": ["Metric1", "Metric2", "Metric3", "Total"], "default_order_by": "Total", }

The above leaderboard schema will look something like this on leaderboard UI:

challenge_phases:

There can be multiple challenge phases in a challenge. A challenge phase in a challenge contains the following subfields:

- id: Unique integer identifier for the challenge phase

- name: Name of the challenge phase

- description: Long description of the challenge phase (set the relative path of the HTML file, e.g.

challenge_details/phase1_description.html) - leaderboard_public: True/False (a Boolean field that gives the flexibility to Challenge Hosts to either make the leaderboard public or private. Default is

False) - is_public: True/False (a Boolean field that gives the flexibility to Challenge Hosts to either hide or show the challenge phase to participants. Default is

False) - is_submission_public: True/False (a Boolean field that gives the flexibility to Challenge Hosts to either make the submissions by default public/private. Note that this will only work when the

leaderboard_publicproperty is set to true. Default isFalse) - start_date: Start DateTime of the challenge phase (Format: YYYY-MM-DD HH:MM:SS, e.g. 2017-07-07 10:10:10)

- end_date: End DateTime of the challenge phase (Format: YYYY-MM-DD HH:MM:SS, e.g. 2017-07-07 10:10:10)

- test_annotation_file: This file is used for ranking the submission made by a participant. An annotation file can be shared by more than one challenge phase. (Path of the test annotation file relative to this YAML file, e.g.

challenge_details/test_annotation.txt) - codename: Unique id for each challenge phase. Note that the codename of a challenge phase is used to map the results returned by the evaluation script to a particular challenge phase. The codename specified here should match with the codename specified in the evaluation script to perfect mapping.

- max_submissions_per_day: Positive integer which tells the maximum number of submissions per day to a challenge phase.

- max_submissions_per_month: Positive integer which tells the maximum number of submissions per month to a challenge phase.

- max_submissions: a Positive integer that decides the overall maximum number of submissions that can be done to a challenge phase.

dataset_splits:

Dataset splits define the subset of test-set on which the submissions will be evaluated on. Generally, most challenges have two splits:

- test-dev (Allow participants to make large number of submissions, let them see how they are doing, and let them overfit)

- test-challenge (Allow small number of submissions so that they cannot mimic test-set. Use this split to decide the winners for the challenge)

A dataset split has the following subfields:

- id: Unique integer identifier for the split

- name: Name of the split (it must be unique for every split)

- codename: Unique id for each split. Note that the codename of a dataset split is used to map the results returned by the evaluation script to a particular dataset split in EvalAI’s database. Please make sure that no two dataset splits have the same codename. Again, make sure that the dataset split’s codename match with what is in the evaluation script provided by the challenge host.

challenge_phase_splits:

A challenge phase split is a relation between a challenge phase and dataset splits for a challenge (many to many relation). This is used to set the privacy of submissions (public/private) to different dataset splits for different challenge phases.

- challenge_phase_id: Id of

challenge_phaseto map with - leaderboard_id: Id of

leaderboard - dataset_split_id: Id of

dataset_split - visibility: It will set the visibility of the numbers corresponding to metrics for this

challenge_phase_split. Select one of the following positive integers based on the visibility level you want: - leaderboard_decimal_precision: Positive integer field used for varying the leaderboard decimal precision. Default value is

2. - is_leaderboard_order_descending: True/False (a Boolean field that gives the flexibility to challenge host to change the default leaderboard sorting order. It is useful in cases where you have error as a metric and want to sort the leaderboard in increasing order of error value. Default is

True)

- challenge_phase_id: Id of

| Visibility | Description |

|---|---|

| 1 | Only visible to challenge host |

| 2 | Only visible to challenge host and participant who made that submission |

| 3 | Visible to everyone on leaderboard |